STR-Match: Matching SpatioTemporal Relevance Score for Training-Free Video Editing

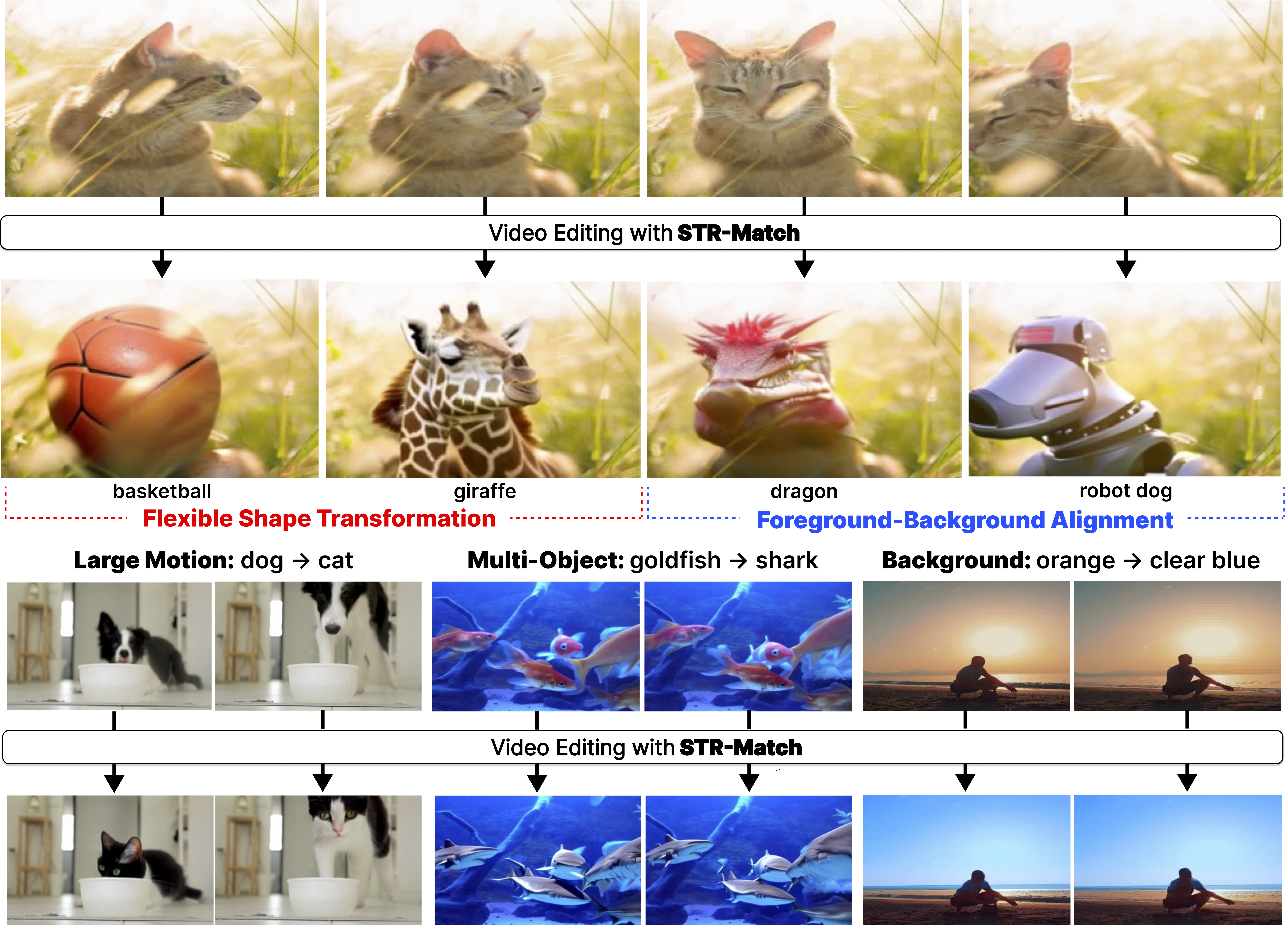

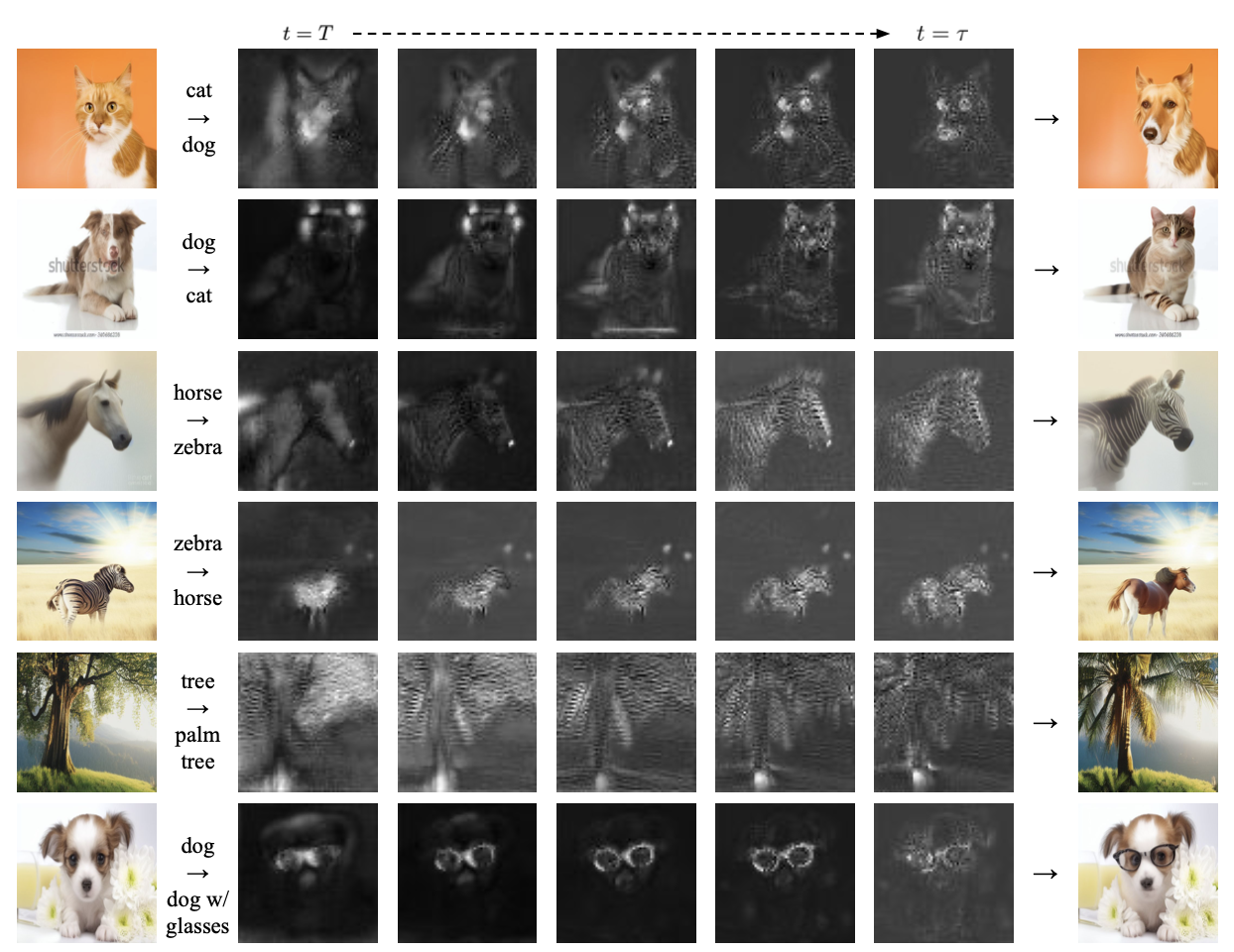

Previous text-guided video editing methods often suffer from temporal inconsistency, motion distortion, and, most notably, limited domain transformation. We attribute these limitations to insufficient modeling of spatiotemporal pixel relevance during the editing process. To address this, we propose STR-Match, a training-free video editing algorithm that produces visually appealing and spatiotemporally coherent videos through latent optimization guided by our novel STR score. The score captures spatiotemporal pixel relevance across adjacent frames by leveraging the 2D spatial attention and 1D temporal modules of text-to-video (T2V) diffusion models, without the overhead of computationally expensive 3D attention mechanisms. Integrated into a latent optimization framework with a latent mask, STR-Match generates temporally consistent and visually faithful videos, maintaining strong performance even under significant domain transformations while preserving key visual attributes of the source. Extensive experiments demonstrate that STR-Match consistently outperforms existing methods in both visual quality and spatiotemporal consistency.
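The abstract describes the STR score only at a high level. The pure-Python sketch below shows one way a spatiotemporal relevance between two adjacent frames *could* be assembled from per-frame 2D spatial attention and per-position 1D temporal attention, together with a matching loss and a masked latent step. The composition rule, the squared-error loss, and the interpretation of the latent mask as a gradient mask are all illustrative assumptions, not the formulation from the paper; the names `str_score`, `str_matching_loss`, and `masked_latent_step` are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def str_score(spatial_f: Matrix, spatial_g: Matrix, temporal_fg: Sequence[float]) -> Matrix:
    """Hypothetical factorized relevance between frame f and an adjacent frame g.

    spatial_f[i][k]: 2D spatial attention from pixel i to pixel k inside frame f.
    spatial_g[k][j]: 2D spatial attention from pixel k to pixel j inside frame g.
    temporal_fg[k]: 1D temporal attention from frame f to frame g at pixel position k.

    Assumed rule: R[i][j] = sum_k spatial_f[i][k] * temporal_fg[k] * spatial_g[k][j],
    i.e. move within frame f, step across time at position k, then move within frame g.
    """
    n = len(spatial_f)
    return [
        [sum(spatial_f[i][k] * temporal_fg[k] * spatial_g[k][j] for k in range(n))
         for j in range(n)]
        for i in range(n)
    ]


def str_matching_loss(r_src: Matrix, r_edit: Matrix) -> float:
    """Mean squared difference between source and edited relevance maps (assumed loss form)."""
    n = len(r_src)
    total = sum(
        (a - b) ** 2
        for row_s, row_e in zip(r_src, r_edit)
        for a, b in zip(row_s, row_e)
    )
    return total / (n * n)


def masked_latent_step(latent: Sequence[float], grad: Sequence[float],
                       mask: Sequence[int], lr: float) -> List[float]:
    """One gradient-descent step applied only where mask == 1 (assumed role of the latent mask)."""
    return [z - lr * g * m for z, g, m in zip(latent, grad, mask)]


if __name__ == "__main__":
    # Each pixel attends only to itself spatially, so relevance reduces to the temporal weights.
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(str_score(identity, identity, [0.9, 0.4]))  # → [[0.9, 0.0], [0.0, 0.4]]
```

In this toy form only an N×N map is built for each adjacent frame pair (N pixels per frame), rather than a full attention matrix over all F·N spatiotemporal tokens, which is the kind of saving the abstract attributes to avoiding 3D attention.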

Low-Resolution Editing is All You Need for High-Resolution Editing. Under Review, Nov 2025. * Equal Contribution

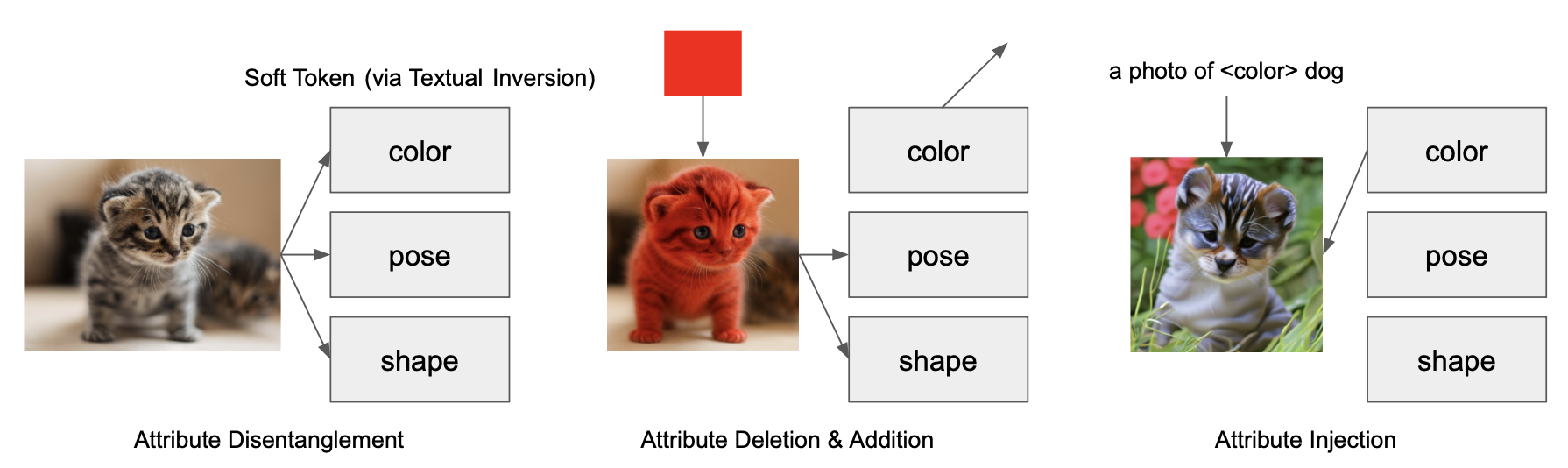


Multiple-Attribute Disentanglement in Personalization on VLM Models. Work in Progress, Nov 2025